Inbound Hack #5: A/B Testing Sample Size Calculator

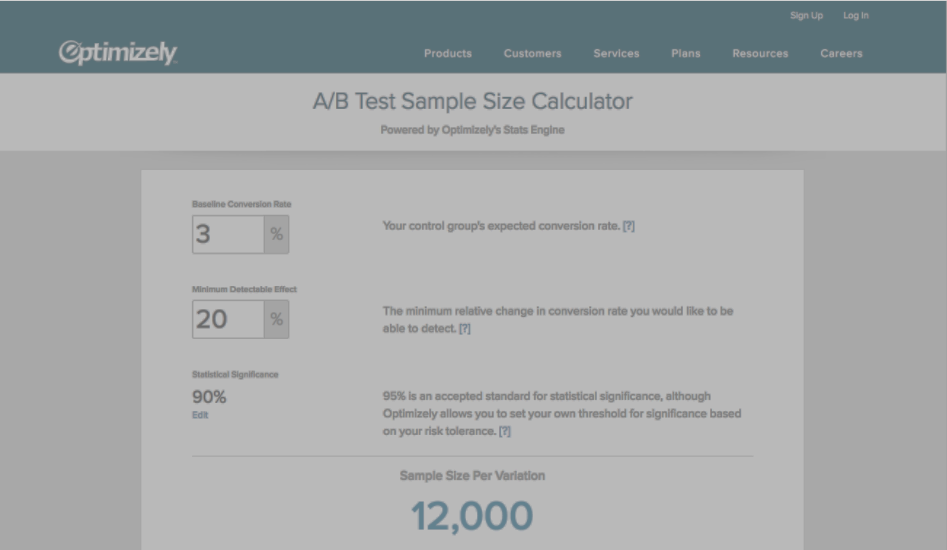

In this video blog, Sean shows you how to calculate the sample size you need for your A/B test using this free online calculator from Optimizely. ...


5 min read

Tammy Duggan-Herd: 05/17/18


One of the easiest (and most common) types of CRO tests is the A/B test. A must-have in any marketer’s optimization toolbelt, A/B testing can appear intimidating due to its technical and statistical nature. In reality, it is one of the most clear-cut and efficient ways of improving conversion rates. By pinpointing the specific variations of your campaign elements that perform well with your audience, A/B tests take the guesswork out of CRO and turn theory into application.

Ready to take the leap? Let’s examine what A/B testing is all about, and discuss some best practices to have you testing like a pro in no time.


Simply put, A/B testing is the marketer’s version of a controlled experiment. Also known as split testing, an A/B test allows you to test variations of some element of a campaign, like a landing page, alongside one another. The results will enable you to determine which version is the most effective option. Because of its fairly straightforward nature, it is one of the most popular tests used in conversion rate optimization.

A/B testing begins with creating two (or more) versions of a piece of content. Any desired variable can be tweaked, and it is considered best practice to make these versions substantially different from one another, but in only one way. The pieces are then presented to similarly sized audiences, and the responsiveness and conversion rates of each group are recorded and analyzed with CRO testing and/or analytics software.

So let’s get down to the nitty-gritty, and examine in detail how an effective A/B test is run.

As with the scientific method, you want to isolate one "independent variable" to test. To clarify, A/B testing allows for more than one variation, as long as the variations are tested one at a time. Any layout or design element about which you’ve been curious is up for testing, from landing page fonts to CTA button placement to email subject lines. Consider developing a hypothesis to evaluate using your results, and remember to keep things simple: don’t test multiple variables at once!

The "dependent variable" will be the metric on which you will choose to focus throughout the test. Conversion rate seems the most obvious, but other metrics relevant to CRO can include shopping cart abandonment rate, time spent on a page, bounce rate, and more. Take time to find the KPIs (key performance indicators) most relevant to the specific piece being tested.

Another consideration is the desired statistical significance of your results. Setting a higher confidence level lowers the chance of mistaking random noise for a real difference, but it also requires a larger sample, so it is effectively an investment in the accuracy of your results. We see some poor statistical literacy in the CRO world regarding this topic and suggest this blog on A/B testing statistics to set it right.

The remaining element of the experiment that needs to be determined is the control, which will be the unaltered version of the piece that you will be testing. With control and test created, you can split your audience into equally sized, randomized groups for testing. If this sounds difficult to implement, don’t worry: it’s a job for your testing tools, a crucial component of your CRO strategy.
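For the curious, here is a minimal sketch of what a randomized, equal split might look like under the hood. It assumes you have a simple list of user IDs; the function name `split_audience` and the fixed seed are illustrative choices, not something a particular testing tool is known to use.

```python
import random

def split_audience(user_ids, seed=42):
    """Shuffle the audience and split it into two near-equal groups.

    The seed is a hypothetical choice that makes the split repeatable.
    """
    shuffled = list(user_ids)
    random.Random(seed).shuffle(shuffled)  # randomize order without touching the input
    midpoint = len(shuffled) // 2
    return shuffled[:midpoint], shuffled[midpoint:]

# Example: 1,000 made-up user IDs split into control and variation groups
control, variant = split_audience(range(1, 1001))
print(len(control), len(variant))
```

Every user lands in exactly one group, and because the order is shuffled first, neither group is biased toward, say, your oldest subscribers.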

Sample size will depend on both the capabilities of the tool being used and the nature of the test. When testing something like a web page, with a constantly increasing number of visitors, the duration of the test will determine sample size directly; examine existing visitation rates to get an idea of how long to conduct it. When A/B testing emails, on the other hand, it’s recommended to test on a certain proportion of your mailing list.
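To make the link between sample size and test duration concrete, here is a rough sketch using a standard normal-approximation formula for comparing two conversion rates. It is not necessarily the formula your testing tool or any online calculator uses, and the baseline rate, lift, power, and traffic figures are made-up assumptions for illustration.

```python
import math
from statistics import NormalDist

def sample_size_per_variation(baseline_rate, min_detectable_lift,
                              confidence=0.95, power=0.80):
    """Approximate visitors needed per variation (two-sided test)."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + min_detectable_lift)  # rate you hope to detect
    z_alpha = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    n = (z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2
    return math.ceil(n)

def days_to_run(n_per_variation, daily_visitors, variations=2):
    """Days needed if daily traffic is split evenly across variations."""
    return math.ceil(n_per_variation * variations / daily_visitors)

# Hypothetical page: 5% baseline conversion rate, hoping to detect a 20% relative lift
n = sample_size_per_variation(0.05, 0.20)
print(n, "visitors per variation")
print(days_to_run(n, daily_visitors=1000), "days at 1,000 visitors per day")
```

Notice how quickly the required sample grows as the lift you want to detect gets smaller or the confidence level gets higher; that is why low-traffic pages often need weeks rather than hours.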

Though your variations should be tested simultaneously, there is nothing wrong with selecting testing times strategically. For instance, well-timed email campaigns will deliver results more quickly; determining what these times are requires some research of your subscriber demographics and segments. As mentioned, depending on the nature of the piece, your site traffic, and the statistical significance that needs to be achieved, the test could take anywhere from a few hours to a few weeks.

If you are interested in gaining some additional insight into the reasoning behind your visitors’ reactions, consider asking for qualitative feedback. Exit surveys and polls can quite easily be added to site pages for the duration of the testing period. This information can add value and efficiency to your results.

Using your pre-established hypothesis and key metrics, it's time to interpret your findings. Keeping confidence levels in mind as well, it will be necessary to determine statistical significance with the help of your testing tool or another calculator. If one variation proves statistically better than the other, congratulations! You can now take action appropriately to optimize the campaign piece.
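If you would like to see roughly what a significance calculator is doing, the sketch below runs a two-proportion z-test, one common way to compare two conversion rates. Your testing tool may use a different method, and the visitor and conversion counts here are invented for the example.

```python
import math
from statistics import NormalDist

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)  # combined rate, assuming no real difference
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value

# Hypothetical results: control converts 200 of 4,000, variation converts 260 of 4,000
z, p_value = two_proportion_z_test(200, 4000, 260, 4000)
confidence = 0.95
print(f"z = {z:.2f}, p = {p_value:.4f}")
print("Significant" if p_value < 1 - confidence else "Inconclusive")
```

A p-value below 1 minus your confidence level (0.05 for 95% confidence) is what most tools report as a statistically significant result.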

But just remember that statistical significance does not equate to practical significance. You always need to take into consideration the time and effort it will take to implement the change and whether the return is worth it. If it's as simple as sending one email template over another with a single click of a button, that's a no-brainer. But if it comes down to having a developer revamp hundreds of landing pages on your site, you want to make sure it's worth it.
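One quick way to sanity-check practical significance is a back-of-the-envelope break-even estimate. The sketch below is only an illustration: the function name, traffic, conversion value, and implementation cost are all hypothetical, and it assumes the lift you measured holds steady after rollout.

```python
def months_to_break_even(monthly_visitors, baseline_rate, variant_rate,
                         value_per_conversion, implementation_cost):
    """Estimate how many months the extra conversions need to repay the change."""
    extra_conversions = monthly_visitors * (variant_rate - baseline_rate)
    extra_revenue = extra_conversions * value_per_conversion
    if extra_revenue <= 0:
        return None  # the variation never pays for itself
    return implementation_cost / extra_revenue

# Hypothetical: 10,000 visitors a month, 5.0% -> 5.5% conversion,
# $40 per conversion, $6,000 of developer time to roll out the change
months = months_to_break_even(10_000, 0.05, 0.055, 40, 6_000)
print(f"Break-even after about {months:.1f} months")
```

If the answer is a few weeks, ship it; if it is several years, the statistically significant winner may not be worth implementing.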

If neither of the variations produced statistically significant results (that is, the test was inconclusive), several options are available. For one, it can be reasonable to simply keep the original variation in place. You can also choose to reconsider your significance level or re-prioritize certain KPIs from the context of the piece being tested. Finally, a more powerful or drastically different variation may be in order. Most importantly, do not be afraid to test and test again; after all, repeated efforts can only help to improve optimization.

Multivariate testing is founded on the same key principle as its A/B counterpart; the difference is in the higher number of variables being tested. The goal is to determine which particular combination of variations performs best, and examine the “convertibility” of each variation in the context of other variables rather than simply a standalone process. In many ways, it can be a more sophisticated practice.

This type of testing is a great way to examine more complex relationships between optimizable elements. In theory, it is possible to test hundreds of combinations side by side! Notably, multivariate tests have their disadvantages, particularly with regard to the greater amount of time and number of site visitors needed to conduct them effectively.
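To see why multivariate tests demand so much more traffic, it helps to count the combinations. The sketch below uses made-up page elements and an assumed figure of 8,000 visitors per combination; the real number depends on your baseline rate and the lift you want to detect.

```python
from itertools import product

# Hypothetical page elements and the options being tested for each
elements = {
    "headline": ["benefit-led", "question", "urgency"],
    "cta_color": ["green", "orange"],
    "hero_image": ["photo", "illustration"],
}

# Every combination of one option per element is its own variation
combinations = list(product(*elements.values()))
visitors_per_combination = 8_000  # assumed, not a rule
total_visitors = len(combinations) * visitors_per_combination

print(len(combinations), "combinations")
print(total_visitors, "visitors needed in total")
```

Three small elements already produce 12 variations, and each added element multiplies the total again, which is how tests reach hundreds of combinations.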

A/B testing is an ideal method for obtaining significant and apparent results quickly regardless of company size, site traffic, or the capabilities of your software. Easy to interpret and less intimidating to marketers new to CRO, it is in some cases even used in continuous cycles by large companies that prefer it over designing multivariate tests. If you are just starting your journey into the optimization world, this may be the best place to start.

In contrast, multivariate testing is often recommended for sites with substantial traffic to accommodate the number of variations that need to be tested. The specific page being tested also needs to have sufficient exposure. Multivariate tests are best applied when you want to experiment with more subtle modifications to a content piece and trace the interactions of different elements. They’re also useful for obtaining results which can later be systematically applied to your site’s design on a larger scale.

Now that you know how to run an A/B test, how do you know which aspect of your marketing campaign to address first? We've got the perfect resource for you: The Campaign Optimization Checklist. It will help you identify which parts of your campaign are underperforming so you can prioritize your testing and optimization efforts.

This blog is part of Your Definitive Guide to Conversion Rate Optimization blog series
